XGBoost Bayesian Optimization

The function returns the test accuracy and a list of Bayesian optimization results. The objective function has the signature `xgboostcv(max_depth, learning_rate, n_estimators)`.
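A minimal sketch of such an objective, assuming the optimizer proposes every hyperparameter as a float (bounded-box optimizers such as bayes_opt work on continuous values). Integer hyperparameters therefore have to be rounded inside the objective. `fake_cv_score` is a hypothetical stand-in for a real cross-validated score; in practice it would train XGBoost and return a CV metric.

```python
def fake_cv_score(max_depth: int, learning_rate: float, n_estimators: int) -> float:
    """Hypothetical stand-in for a real CV score (e.g. mean validation AUC).

    It peaks at max_depth=6, learning_rate=0.1, n_estimators=300 purely for illustration.
    """
    return (
        -0.01 * (max_depth - 6) ** 2
        - (learning_rate - 0.1) ** 2
        - ((n_estimators - 300) / 1000) ** 2
    )


def xgboostcv(max_depth: float, learning_rate: float, n_estimators: float) -> float:
    """Objective to maximize: round integer-valued hyperparameters before use."""
    return fake_cv_score(
        max_depth=int(round(max_depth)),
        learning_rate=float(learning_rate),
        n_estimators=int(round(n_estimators)),
    )
```

Rounding inside the objective keeps the search space continuous for the optimizer while the model only ever sees valid integer settings.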

Bayesian Model-Based Optimization in R (R-bloggers)

To show Bayesian optimization in action, we use the BayesianOptimization library [3], written in Python, to tune the hyperparameters of Random Forest and XGBoost.

This shows how to read the text representing a map of Chicago in. This optimization function takes the tuning parameters as input and returns the best cross-validation result, i.e., the highest AUC score in this case. The Bayesian optimization algorithm can be summarized as follows.

Select a sample by optimizing the acquisition function. The example code imports `KFold` from `cross_validation` and imports `xgboost as xgb`. A related Kaggle notebook uses data from the New York City Taxi Fare Prediction competition.
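Selecting the next sample can be sketched with the expected-improvement (EI) acquisition function. This assumes a surrogate model has already produced a predicted mean `mu` and standard deviation `sigma` at each candidate point, and that we are maximizing. The names `expected_improvement`, `select_next`, and the exploration margin `xi` are illustrative choices, not any library's API.

```python
import math
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def expected_improvement(
    mu: Sequence[float], sigma: Sequence[float], best: float, xi: float = 0.01
) -> List[float]:
    """EI for maximization: E[max(f(x) - best - xi, 0)] under N(mu, sigma^2)."""
    out = []
    for m, s in zip(mu, sigma):
        if s <= 0.0:
            # No predictive uncertainty: set EI to 0, a simplification many implementations use.
            out.append(0.0)
            continue
        improvement = m - best - xi
        z = improvement / s
        pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
        cdf = 0.5 * math.erfc(-z / math.sqrt(2.0))  # standard normal CDF, accurate for z < 0
        out.append(improvement * cdf + s * pdf)
    return out


def select_next(
    candidates: Sequence[T], mu: Sequence[float], sigma: Sequence[float],
    best: float, xi: float = 0.01,
) -> T:
    """Return the candidate with the highest expected improvement."""
    ei = expected_improvement(mu, sigma, best, xi)
    return candidates[max(range(len(ei)), key=ei.__getitem__)]
```

A candidate with a slightly lower mean but much larger uncertainty can win, which is how EI trades exploration against exploitation.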

The algorithm has two. This function estimates XGBoost parameters using Bayesian optimization. Another example uses LightGBM and XGBoost to solve a regression problem, with Bayesian optimization tuning their hyperparameters.

Bayesian Optimization for Hyperparameter Tuning of an XGBoost Classifier: in this approach, we use a data set for which we have already completed an initial analysis and exploration of a.

Tutorial: Bayesian Optimization with XGBoost (Kaggle competition notebook, 30 Days of ML).

Because we are using the non-scikit-learn version of XGBoost, some modifications to the previous code are required, as opposed to a straightforward drop-in for.
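With the native (non-scikit-learn) interface, training goes through `xgb.train(params, dtrain, num_boost_round=...)` or `xgb.cv(...)` on a `DMatrix`. The number of trees is therefore passed as `num_boost_round` rather than as `n_estimators`, and the learning rate's native name is `eta` (`learning_rate` is accepted as an alias). A minimal sketch of that translation step, assuming the optimizer proposes `n_estimators`, `learning_rate`, and optionally `max_depth` as floats. `to_native_params` is a hypothetical helper, and the block deliberately does not import XGBoost.

```python
from typing import Any, Dict, Tuple


def to_native_params(proposal: Dict[str, float]) -> Tuple[Dict[str, Any], int]:
    """Split a scikit-learn-style proposal into (params, num_boost_round).

    Intended use (not executed here): xgb.train(params, dtrain, num_boost_round=rounds)
    """
    p = dict(proposal)  # copy so the optimizer's dict is not mutated
    rounds = int(round(p.pop("n_estimators")))  # tree count is a call argument, not a param
    params: Dict[str, Any] = {"eta": float(p.pop("learning_rate"))}  # native learning-rate name
    if "max_depth" in p:
        params["max_depth"] = int(round(p.pop("max_depth")))  # must be an integer
    params.update(p)  # pass any remaining keys (e.g. subsample) through unchanged
    return params, rounds
```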

I am able to improve the performance of my XGBoost model through Bayesian optimization, but the best I can achieve through Bayesian optimization when using LightGBM. Santander Customer Transaction Prediction (Kaggle competition). The script `xgb_bayes_opt.py` (XGBoost classification with Bayesian optimization) starts with `from bayes_opt import BayesianOptimization` and then imports from `sklearn.`

The optimal XGBoost hyperparameters were found through Bayesian optimization, and the Bayesian optimization acquisition function was improved to prevent. Introduction to Bayesian Optimization.

Evaluate the sample with the objective function. The xgboost interface accepts matrices `X`. The XGBoost models were optimized using Bayesian optimization (BO), while training quality was evaluated by leave-one-out cross-validation (LOOCV).
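Leave-one-out cross-validation makes every objective evaluation expensive: with n samples, one evaluation means n model fits. That cost is why a sample-efficient optimizer such as BO is attractive here. A minimal sketch of LOOCV, assuming a trivial model that predicts the training-set mean in place of an XGBoost fit. Both function names are hypothetical.

```python
from typing import Iterator, List, Sequence, Tuple


def loocv_splits(n: int) -> Iterator[Tuple[List[int], int]]:
    """Yield (train_indices, held_out_index) pairs for leave-one-out CV."""
    for i in range(n):
        yield [j for j in range(n) if j != i], i


def loocv_mse_of_mean(y: Sequence[float]) -> float:
    """LOOCV mean squared error of a model that predicts the training-set mean.

    A real objective would fit an XGBoost model on each training split instead;
    either way, one evaluation costs len(y) model fits.
    """
    errors = []
    for train_idx, i in loocv_splits(len(y)):
        prediction = sum(y[j] for j in train_idx) / len(train_idx)
        errors.append((y[i] - prediction) ** 2)
    return sum(errors) / len(errors)
```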

Here's how we can speed up hyperparameter tuning with (1) Bayesian optimization using Hyperopt and Optuna, running on (2) the Ray distributed machine learning framework. (Competition notebook, Apr 25, 2019.) `Best_Value`: the value of.

By default, the optimizer runs for 160 iterations or 1 hour. The Bayesian optimization function takes three inputs: the objective function, the search space, and `random_state`.
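A compact, self-contained sketch of the whole loop, assuming the three inputs map to an objective to maximize, a box-shaped search space given as `{name: (low, high)}`, and a `random_state` seed. The surrogate is a zero-mean Gaussian process with an RBF kernel. Its fixed length scale is an assumption; real libraries usually fit kernel hyperparameters. The acquisition is upper confidence bound (UCB), maximized over random candidate points. `bayesian_optimize`, `n_init`, `n_iter`, `kappa`, and `n_candidates` are hypothetical names, and this sketch stops after a fixed number of iterations rather than a time budget.

```python
import numpy as np


def rbf_kernel(a: np.ndarray, b: np.ndarray, length: float) -> np.ndarray:
    """Squared-exponential kernel between the rows of a and the rows of b."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-0.5 * d2 / length**2)


def gp_posterior(X, y, Xs, length=0.2, noise=1e-5):
    """Zero-mean, unit-variance GP posterior mean and std at Xs given (X, y)."""
    K = rbf_kernel(X, X, length) + noise * np.eye(len(X))
    Ks = rbf_kernel(X, Xs, length)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    mu = Ks.T @ alpha
    v = np.linalg.solve(L, Ks)
    var = np.clip(1.0 - (v**2).sum(axis=0), 1e-12, None)  # prior variance is 1
    return mu, np.sqrt(var)


def bayesian_optimize(objective, space, random_state=0, n_init=5, n_iter=20,
                      kappa=2.0, n_candidates=2000):
    """Maximize objective(**params) over the box `space`; return (best_params, best_value)."""
    rng = np.random.default_rng(random_state)
    names = list(space)
    low = np.array([space[k][0] for k in names], dtype=float)
    high = np.array([space[k][1] for k in names], dtype=float)

    def to_params(u):  # map a point in the unit cube back to the real box
        return {k: float(v) for k, v in zip(names, low + u * (high - low))}

    U = rng.random((n_init, len(names)))  # random initial design in the unit cube
    y = np.array([objective(**to_params(u)) for u in U])
    for _ in range(n_iter):
        scale = y.std() or 1.0
        y_norm = (y - y.mean()) / scale  # standardize targets for the unit-variance GP
        cand = rng.random((n_candidates, len(names)))
        mu, sd = gp_posterior(U, y_norm, cand)
        u_next = cand[np.argmax(mu + kappa * sd)]  # UCB acquisition
        U = np.vstack([U, u_next])
        y = np.append(y, objective(**to_params(u_next)))
    best = int(np.argmax(y))
    return to_params(U[best]), float(y[best])
```

Usage would look like `bayesian_optimize(xgboostcv, {"max_depth": (3, 10), "learning_rate": (0.01, 0.3), "n_estimators": (100, 1000)})`, with integer rounding done inside the objective. Larger `kappa` explores more; smaller `kappa` exploits the current best region.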

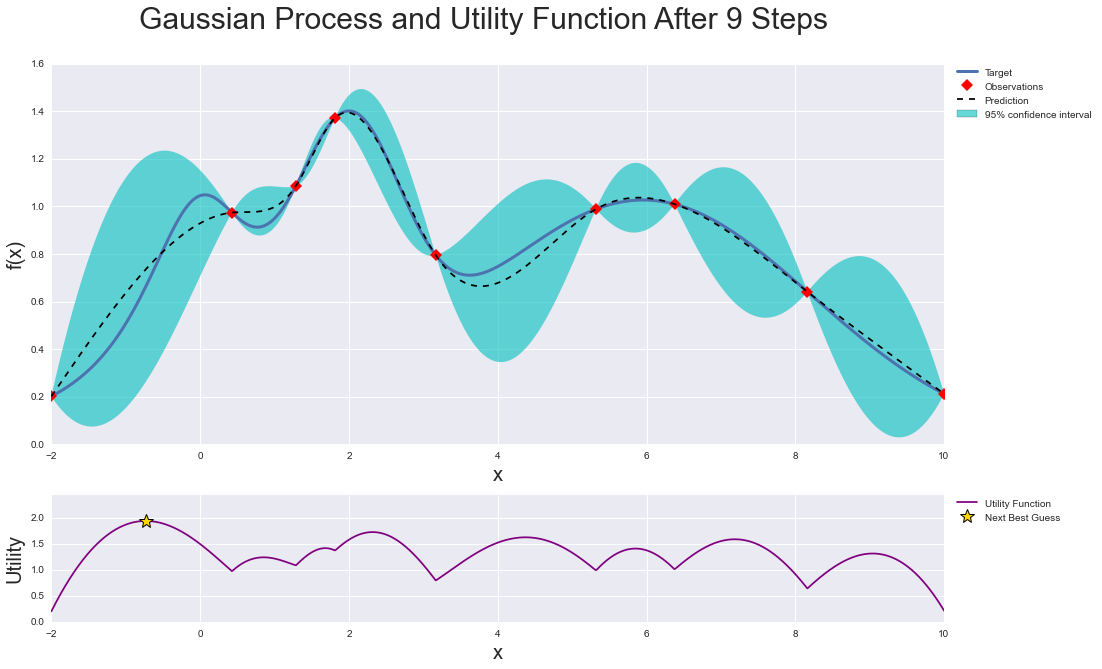

`Best_Par`: a named vector of the best hyperparameter set found. Bayesian optimization is a fascinating algorithm because it proposes new tentative values based on the probability of finding something better.
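One direct formalization of "the probability of finding something better" is the probability-of-improvement (PI) acquisition function. A minimal sketch, assuming a Gaussian surrogate prediction with mean `mu` and standard deviation `sigma` at a candidate point, maximization, and an optional margin `xi` (a hypothetical parameter name). This is not necessarily the acquisition any particular package uses by default.

```python
import math


def probability_of_improvement(mu: float, sigma: float, best: float, xi: float = 0.0) -> float:
    """P(f(x) > best + xi) when f(x) ~ N(mu, sigma^2)."""
    if sigma <= 0.0:
        # A deterministic prediction either beats the threshold or it does not.
        return 1.0 if mu > best + xi else 0.0
    z = (mu - best - xi) / sigma
    return 0.5 * math.erfc(-z / math.sqrt(2.0))  # standard normal CDF at z
```

PI ignores how large the improvement would be, so with `xi = 0` it tends to favor tiny, near-certain gains; a positive `xi` pushes it toward exploration.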

I'm trying to perform Bayesian optimization to tune the parameters of an XGBoost regressor, following this code. Finding optimal parameters: now we can start to run some optimisations using the ParBayesianOptimization package. The `xgb_opt()` call takes `train_data`, `train_label`, `test_data`, `test_label`, `objectfun`, `evalmetric`, and `eta_range = c(0.1, 1L)`.
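Range arguments such as `eta_range` define box bounds for each hyperparameter. Before the surrogate can be fitted, Bayesian optimization needs a handful of evaluated starting points, which are commonly drawn at random inside those bounds. A minimal sketch of such an initial design, assuming an eta range of 0.1 to 1 and a hypothetical integer `max_depth` range of 4 to 6. `initial_design` is an illustrative helper, not part of any R or Python package.

```python
import random
from typing import Dict, List, Tuple


def initial_design(
    ranges: Dict[str, Tuple[float, float]],
    n: int,
    seed: int = 0,
    integer: Tuple[str, ...] = (),
) -> List[Dict[str, float]]:
    """Draw n random points inside the given bounds (integer names get whole numbers)."""
    rng = random.Random(seed)  # seeded so the design is reproducible
    points = []
    for _ in range(n):
        point = {}
        for name, (low, high) in ranges.items():
            if name in integer:
                point[name] = rng.randint(int(low), int(high))  # inclusive bounds
            else:
                point[name] = rng.uniform(low, high)
        points.append(point)
    return points
```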

Let's implement Bayesian optimization for boosting machine learning algorithms. A paper on Bayesian optimization.

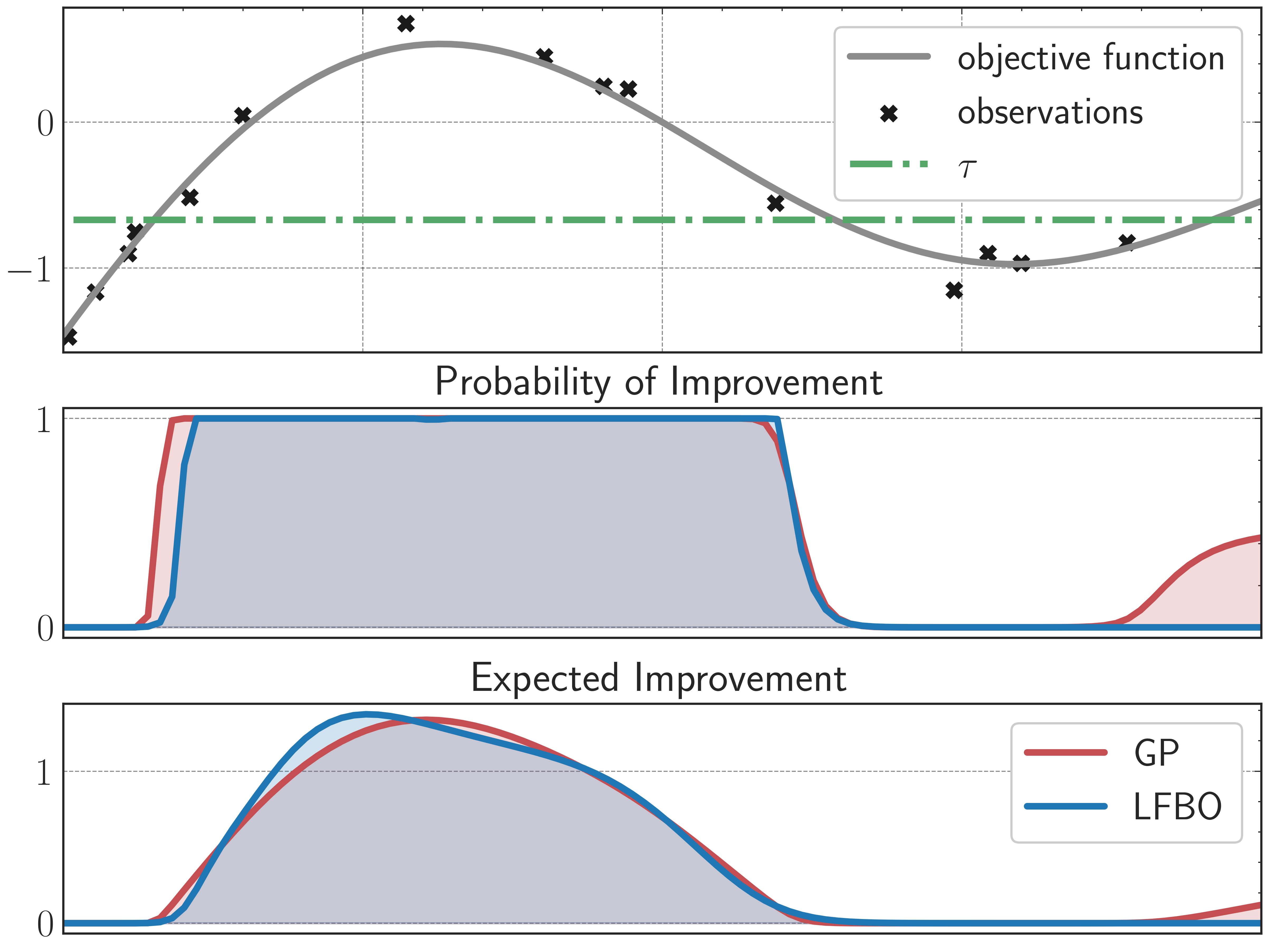

A General Recipe for Likelihood-Free Bayesian Optimization

BORE: Bayesian Optimization by Density-Ratio Estimation (Louis Tiao)

Bayesian Hyperparameter Optimization of Gradient Boosting Machine, by Maslova Victoria (Medium)

About Bayesian Optimization (BayesO 0.5.0 Alpha Documentation)

Revolutionizing Membrane Design Using Machine Learning Bayesian Optimization (Environmental Science & Technology)

Bayesian Hyperparameter Optimization (YouTube)

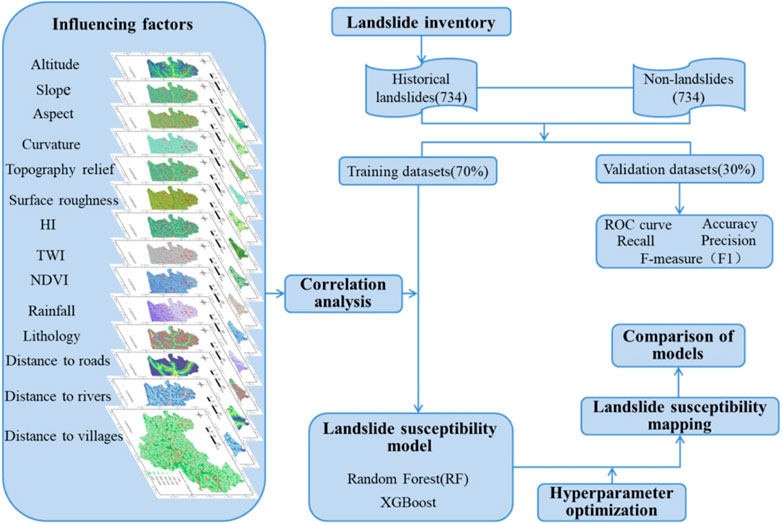

Application of Bayesian Hyperparameter Optimized Random Forest and XGBoost Model for Landslide Susceptibility Mapping (Frontiers)

The Progress of Bayesian Optimization for Tuning Hyperparameters of SVR

Bayesian Optimization for Accelerating Hyper-Parameter Tuning (Semantic Scholar)

TensorFlow Model Optimization Toolkit: Post-Training Integer Quantization

Bayesian Optimization (Martin Krasser's Blog)

20-Fold Cross-Validation of XGBoost Model for a Set of Hyperparameters

Hyperparameter Optimization Using Bayesian Optimization, by Matias Aravena Gamboa (Spikelab, Medium)
